AWS Global Accelerator

Hunter Fernandes

Software Engineer

A few months ago AWS introduced a new service called Global Accelerator.

AWS CloudFront PoPs around the United States.

This service is designed to accept traffic at local edge locations (maybe the same CloudFront PoPs?) and then route it over the AWS backbone to your service region. An interesting feature is that it terminates TCP at the edge, which can shave latency off quite a few packet round trips.

Bear in mind that after the TCP handshake, the TLS handshake is still required, and it needs a round trip to our service region, us-west-2 (Oregon), regardless of which edge location Global Accelerator uses.
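To make the round-trip arithmetic concrete, here is a rough sketch in Python. The RTT values are made up for illustration, not measurements, and the model assumes a single TLS round trip (as in TLS 1.3) and that the edge can forward to the region without paying for a fresh backbone connection. `PathRtt`, `direct_setup_ms`, and `accelerated_setup_ms` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PathRtt:
    """Round-trip times in milliseconds (hypothetical values, not measurements)."""
    client_to_edge: float     # client <-> nearest Global Accelerator edge
    edge_to_region: float     # edge <-> us-west-2 over the AWS backbone
    client_to_region: float   # client <-> us-west-2 over the public internet


def direct_setup_ms(rtt: PathRtt, tls_round_trips: int = 1) -> float:
    """TCP handshake plus TLS handshake, both travelling all the way to the region."""
    return rtt.client_to_region * (1 + tls_round_trips)


def accelerated_setup_ms(rtt: PathRtt, tls_round_trips: int = 1) -> float:
    """TCP handshake ends at the edge; TLS still goes edge -> region and back.

    Simplifying assumption: the edge-to-region hop adds no extra connection setup.
    """
    tcp = rtt.client_to_edge
    tls = (rtt.client_to_edge + rtt.edge_to_region) * tls_round_trips
    return tcp + tls


# Illustrative numbers only.
example = PathRtt(client_to_edge=5.0, edge_to_region=20.0, client_to_region=45.0)
savings_ms = direct_setup_ms(example) - accelerated_setup_ms(example)
```

With these made-up numbers the direct path spends 90 ms on connection setup and the accelerated path 30 ms. The TCP portion shrinks because it stops at the edge, while the TLS portion only improves if the edge-plus-backbone path beats the public internet path.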

Performance Results

Of course I am a sucker for these “free” latency improvements, so I decided to give it a try and set it up on our staging environment. I asked a few coworkers around the United States to run some tests and here are the savings:

| Location | TCP Handshake | TLS Handshake | Weighted Savings |
|---|---|---|---|
| Hunter @ San Francisco | -42 ms | -1 ms | -43 ms |
| Kevin @ Iowa | -63 ms | -43 ms | -131 ms |
| Matt @ Kentucky | -58 ms | -27 ms | -98 ms |
| Sajid @ Texas | -50 ms | +57 ms | -14 ms |

My own entry from San Francisco makes total sense. We see savings on the time to set up the TCP connection because instead of having to make a round trip to Oregon, the connection can be set up in San Jose. It also makes sense that the TLS handshake did not see any savings, as that still has to go to Oregon. The path from the SF Bay Area to Oregon is pretty good, so there is not much to be saved there.
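The post does not say which tool produced these numbers. One way to take comparable measurements is to time the TCP connect and the TLS handshake separately with Python's standard `socket` and `ssl` modules. This is only a sketch: the function names are hypothetical, and any hostnames you pass in would be your own direct and accelerated endpoints.

```python
import socket
import ssl
import time


def time_tcp_connect(host: str, port: int, timeout: float = 5.0):
    """Connect over TCP and return (connected socket, elapsed milliseconds)."""
    # Resolve first so the DNS lookup is not counted as handshake time.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    sock = socket.socket(family, socktype, proto)
    sock.settimeout(timeout)
    start = time.perf_counter()
    sock.connect(addr)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return sock, elapsed_ms


def time_tls_handshake(sock: socket.socket, server_hostname: str) -> float:
    """Run the TLS handshake on an already-connected socket; return elapsed ms."""
    context = ssl.create_default_context()
    start = time.perf_counter()
    # wrap_socket performs the handshake immediately on a connected socket.
    tls_sock = context.wrap_socket(sock, server_hostname=server_hostname)
    elapsed_ms = (time.perf_counter() - start) * 1000
    tls_sock.close()
    return elapsed_ms


def measure(host: str, port: int = 443) -> dict:
    """Time both phases against one endpoint."""
    sock, tcp_ms = time_tcp_connect(host, port)
    tls_ms = time_tls_handshake(sock, host)
    return {"tcp_ms": tcp_ms, "tls_ms": tls_ms}
```

Calling `measure()` once against the direct hostname and once against the Global Accelerator hostname, then subtracting, gives per-phase deltas like the ones in the table. A single sample is noisy, so it is worth repeating the call several times and comparing medians.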

However, for Iowa and Kentucky, the savings are quite significant. This is because instead of transiting over the public internet, the traffic is now going over the AWS backbone.

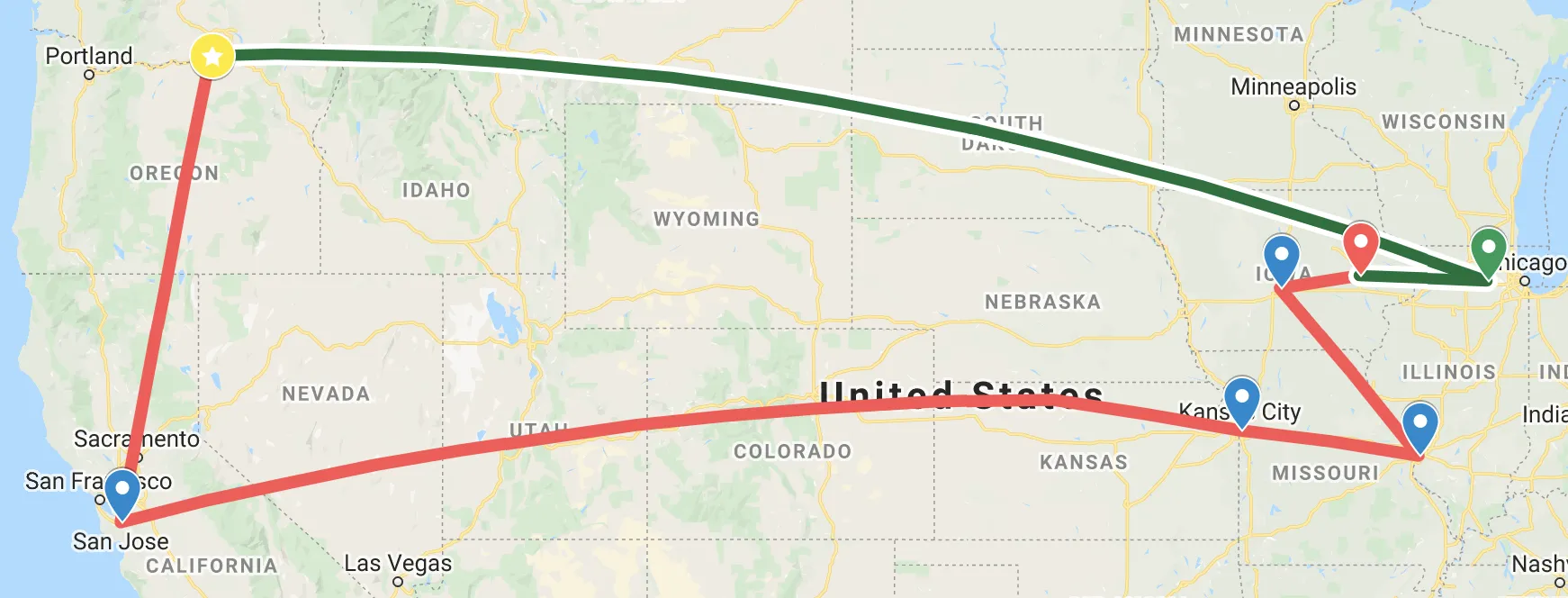

Here’s a traceroute from Iowa comparing the public internet to using Global Accelerator.

- Green is with Global Accelerator.

- Red is without Global Accelerator using the public internet.

You can see that the path is much shorter and more direct with Global Accelerator. Honestly, me using Iowa as a comparison here is a bit of a cheat, as you can see from the AWS PoP / Backbone map that there is a direct line from Iowa to Oregon.

But that is kind of the point? AWS is incentivized for performance reasons to create PoPs in places with lots of people. AWS is incentivized to build out their backbone to their own PoPs. Our customers are likely to be in places with lots of people. Therefore Global Accelerator lets us reach our customers more directly, and AWS is incentivized to keep building that network out.

Where it gets weird is Texas. The TCP handshake is faster, but the TLS handshake is slower. I am not sure why this is. In fact, I checked with other coworkers from different areas in Texas and they had better results.

Production

I was happy with the results and decided to roll it out to production while keeping an eye on the metrics from Texas. We rolled it out to 5% of our traffic and everything seemed to be going well, so we rolled it out to 20% then 100% of our traffic.
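The post does not describe the mechanism used to split traffic. A common approach is weighted routing, where each endpoint receives a share of requests equal to its weight divided by the sum of all weights. Here is a small simulation of that idea, assuming two endpoints labelled `accelerated` and `direct` (both labels are hypothetical):

```python
import random


def rollout_weights(accelerated_percent: int) -> dict:
    """Split 100 weight units between the new path and the old one."""
    if not 0 <= accelerated_percent <= 100:
        raise ValueError("accelerated_percent must be between 0 and 100")
    return {
        "accelerated": accelerated_percent,
        "direct": 100 - accelerated_percent,
    }


def pick_endpoint(weights: dict, rng: random.Random) -> str:
    """Choose an endpoint with probability weight / sum(weights)."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# The three stages mentioned above: 5%, then 20%, then 100%.
stages = [rollout_weights(p) for p in (5, 20, 100)]
```

Stepping through the stages only changes the weights, so backing out is a matter of setting the accelerated weight back to zero.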

We observed a 17% reduction in latency across the board and a 38% reduction in the 99th percentile latency. That is an amazing improvement for a service that is just a few clicks to set up.
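For anyone wanting to run the same comparison on their own latency samples, here is a minimal sketch. It assumes "across the board" means the mean, which the post does not specify, and the function names are hypothetical.

```python
import statistics


def percent_reduction(before: float, after: float) -> float:
    """Relative improvement of `after` over `before`, as a percentage."""
    return (before - after) * 100 / before


def p99(samples) -> float:
    """99th percentile: the last of the 99 cut points from quantiles(n=100)."""
    return statistics.quantiles(samples, n=100)[98]


def compare(before_ms, after_ms) -> dict:
    """Compare two sets of request latencies (milliseconds)."""
    return {
        "mean_reduction_pct": percent_reduction(
            statistics.mean(before_ms), statistics.mean(after_ms)
        ),
        "p99_reduction_pct": percent_reduction(p99(before_ms), p99(after_ms)),
    }
```

The two lists should cover comparable traffic (same endpoints, similar time window), otherwise the percentages mostly reflect a change in workload rather than in the network path.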

I am pleased to say the data from Texas has improved as well. While I am not sure what the issue was, it seems to have resolved itself. Hopefully AWS will release some better network observation tools in the future to aid debugging these issues.