MySQL Proxies

Hunter Fernandes

Software Engineer

Our Django application connects to MySQL to store all of its data. This is a pretty typical setup. However, you may not know that establishing connections to MySQL is rather expensive.

MySQL Connections

I have written previously about controlling API response times — we take it very seriously! One thing that blows up response latencies at higher percentiles is when our application has to establish a new MySQL connection. This tacks on an additional 30-60ms of response time. This cost comes from the MySQL server-side — connections are internally expensive and require allocating buffers.

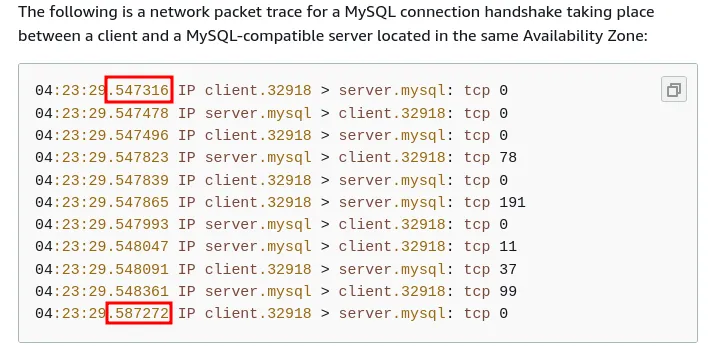

The network does not significantly contribute to the setup cost. Go ahead and set up a local MySQL server and take connection timings. You will still see 30ish milliseconds even over loopback! Even AWS’ own connection guide for debugging MySQL packets shows the handshake taking 40ms!

We have two other wrinkles:

- Django adds some preamble queries, so that adds further to connection setup costs.

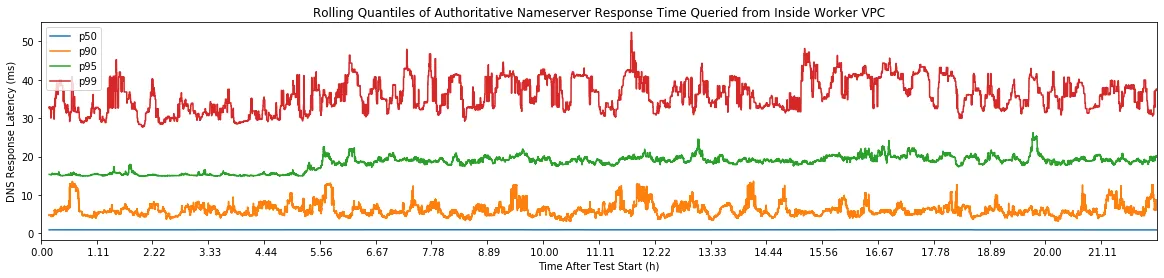

- We need to be able to gracefully (and quickly) recover from database failover. In practice, this means that we need to re-resolve the database hostname every time we connect. RDS DNS resolution is slow. I have the receipts! These instrumented numbers factor in cache hits.

All of this is to say that you want to reduce the number of times that you have to establish a MySQL connection.

The most straightforward way to do this is to reuse connections. And this actually works great up to a certain point. However, there are two quirks:

First, connections have server-side state that is expensive to maintain. A connection may take up a megabyte of ram on the database even if the connection is doing nothing. This number is highly sensitive to database configuration parameters. This cost is multiplied by thousands of connections.

Second, we have API processes and background processes that often times are doing nothing. Due to the nature of our workload, many of our services are busy and times when other services are not. In aggregate, we have a nice load pattern. But each particular service has a spiky load pattern. If we keep connections open forever, we are hogging database resources for connections that are not being used.

We have here a classic engineering tradeoff! Do we keep the connections open forever and hog database resources to ultimately minimize database connection attempts?

| Short Lifetimes | Long Lifetimes |

|---|---|

| Least idle waste ✅ | Most idle waste |

| Frequent reconnects | Minimal reconnects ✅ |

| Higher p95 | Lower p95 ✅ |

Database Proxies

But we want low idle waste while also having minimal reconnects and a lower p95. What do we do?

The answer is to use a database proxy. Instead of your clients connecting directly to the database server, the clients makes a “frontend connection” to the proxy. The proxy then establishes a matching “backend connection” to the real database server. Proxies (generally) talk MySQL wire protocol, so as far as your application code is concerned nothing has changed.

When your client is longer actively using the MySQL connection, the proxy will mark the backend connection as inactive. Next time a client wants to connect to the database via the proxy, the proxy will simply reuse the existing MySQL connection instead of creating a new one.

Thus, two (or more) client MySQL connections can be multiplexed onto a single real backend connection. The effect of this is that

- The number of connections to the MySQL database is cut down significantly, and

- Clients connecting to the proxy are serviced very quickly. Clients don’t have to wait for the slow backend connection setup.

The first wrinkle is that if both frontend connections want to talk to the database at the same time then the proxy has to either

- Wait for one frontend connection to become inactive before servicing the other (introducing wait time), or

- Spin up another backend connection so that both frontend connections can be serviced at the same time, which makes you still pay the connection setup price as well as the backend state price.

What the proxy actually does in this case depends on the configuration.

A second wrinkle occurs due to the highly-stateful nature of MySQL connections. There is a lot of backend state for a connection. The proxy needs to know about this state as well, or is it could errantly multiplex a frontend connection onto a backend connection where the expected states are misaligned. This is a fast way to get big issues.

To solve this, proxies track the state of each frontend and backend connection. When the proxy detects that a connection has done something to affect the state that tightly bounds the frontend to the backend connection, the proxy will “pin” the frontend to the backend and prevent backend reuse.

RDS Proxy

There are a few MySQL database proxies that are big in the FOSS ecosystem right now. The top two are sqlproxy (which is relatively new), and Vitess (where the proxy ability is just small part in a much larger project). Running a proxy yourself is adding more custom infrastructure that comes with its own headaches though. A managed/vendor-hosted version is better at our scale.

And, what do you know? AWS just released RDS Proxy with support for Aurora MySQL. I tried it out and found it… wanting.

Look for my experience with RDS proxy in a post coming soon!