Bootstrapping Trust on Kubernetes

We use Kubernetes at $work, and since I am in charge of platform, Kubernetes is my problem. Here's an interesting problem when trying to secure your Kubernetes workload.

Our pods need to talk to each other over the network. Early on, we decided that each pod would receive a unique identity in our application's authentication layer. This provides us maximum auditability -- we can tell exactly which pod performed any given network call. This extends up one level to the service layer as well. With this system, when a pod receives a network call it can tell:

- What service it is talking to, and

- Which pod it is talking to.

It can then allow or deny specific actions based on identity.

This is a great idea! So how do we implement it? The wrinkle is that when programs start, they have nothing. They know who they are. But how do they prove to other pods who they are?

Proving Identity

For ease of reading, I will name the two pods in this example Alice and Bob. Alice is the pod that wants to prove its identity to Bob.

In general, the way for Alice to prove it is, in fact, Alice is to present something that only that Alice could have. On Kubernetes, by default, a pod is granted a Kubernetes service account (SA) token. This token allows it to communicate with the Kubernetes API server.

So our first option is for Alice to send the SA token to Bob. Bob can inspect and check the SA token against the Kubernetes API server. If the token is valid, Bob knows the caller is Alice.

This is bad because now Bob has Alice's SA token. If Bob were a bad actor (or compromised), then

- Bob can use the SA token to issue Kubernetes API calls as Alice. Whatever Alice can do, Bob can do too under this scheme!

- Bob can submit the SA token to other services, which would then think Bob is Alice and allow Bob to act as Alice.

Either case is not acceptable to us. So, we need a way for Alice to prove its identity without giving away the secret to the counterparty.

Early Attempts

For the longest time, we compromised on this point by having a central authentication service (Bob in this example) that had access to read Kubernetes service account tokens.1 Alice would send a hashed version of the SA to Bob, and Bob would look through the Kubernetes service secrets and validate the hash matched what Kubernetes had on record for Alice.

This did not actually solve the problem: now the hash was the valuable McGuffin instead of the SA token. But at least it did reduce the value of the token being exchanged: now if there was a MITM attack between Alice and Bob, the attacker would only get the hash, not the actual SA token. But now Bob needs access to read ALL tokens! Terrible.

A better method is to have a chain of trust. But what is the root of the chain? We already have something that is the root of all trust: the Kubernetes API server.

Unfortunately, the Kubernetes API server did not have a method of issuing tokens that could be used to prove identity safely... until recently.

Token Projection & Review API

Kubernetes 1.20 GA'd Bound Service Account Tokens implemented through Token Projection and the Token Review API. This allows a pod to request a token that the Kuberenetes API server will inject into the pod as a file.

The most important part of this KEP (for our purposes) is the token can be arbitrarily scoped. This means that Alice can request a token that is scoped to only allow it to talk to Bob. Therefore, if Bob were compromised, the attacker would not be able to impersonate Alice to Charlie.

The Token Review API is the counterpart to Token Projection. It allows a pod to submit a token and a scope to the Kubernetes API server for validation. The API server is responsible for checking that the token is trusted and the scopes on the token match the submitted scopes.

This simplifies our wacky hashing scheme and god-mode service and turns it into a simple exchange:

- Alice reads the file mounted in the pod.

- Alice sends the token to Bob.

- Bob submits the token to the Kubernetes API server for validation with the bob scope.

- The Kubernetes API server validates the token and the scopes.

Just some file reading and some HTTP requests!

Concrete Example

Let's walk through a concrete example of this in action.

Alice is very simple:

---

kind: ServiceAccount

apiVersion: v1

metadata:

name: alice

namespace: default

---

kind: Pod

apiVersion: v1

metadata:

name: alice-pod

namespace: default

spec:

serviceAccountName: alice

containers:

- name: alice

image: alpine/k8s

command: ["/bin/sh"]

args: ["-c", "while true; do sleep 30; done;"]

volumeMounts:

- name: alice-token

mountPath: /var/run/secrets/hfernandes.com/mytoken

readOnly: true

volumes:

- name: alice-token

projected:

sources:

- serviceAccountToken:

path: token

expirationSeconds: 7200

audience: bob

---

Bob is a little more complicated. We must give it permission to talk to the Kubernetes Token Review API. Since Token Review is not namespaced, we give it a ClusterRole instead of a Role.

---

kind: ServiceAccount

apiVersion: v1

metadata:

name: bob

namespace: default

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: bob

rules:

- apiGroups: ["authentication.k8s.io"]

resources: ["tokenreviews"]

verbs: ["create"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: bob

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: bob

subjects:

- kind: ServiceAccount

name: bob

namespace: default

---

# Bob pod

kind: Pod

apiVersion: v1

metadata:

name: bob-pod

namespace: default

spec:

serviceAccountName: bob

containers:

- name: bob

image: alpine/k8s:1.25.15 # Already has kubectl installed

command: ["/bin/sh"]

args: ["-c", "while true; do sleep 30; done;"]

---

Now, if we look in alice-pod we find our token:

$ kubectl exec -it alice-pod -- sh

/ # ls /var/run/secrets/hfernandes.com/mytoken/

token

/ # cat /var/run/secrets/hfernandes.com/mytoken/token

XXXXXXXsecretXXXXXX.YYYYYYYYsecretYYYYYYYYY.ZZZZZZZZZZZZsecretZZZZZZZZZZZZZZ

Let's go into bob-pod and submit this token to the Kubernetes API server for validation:

$ kubectl exec -it bob-pod -- sh

/apps # cat tokenrequest.json

{

"apiVersion": "authentication.k8s.io/v1",

"kind": "TokenReview",

"spec": {

"audiences": ["bob"],

"token": "XXXX"

}

}

/apps # kubectl create --raw '/apis/authentication.k8s.io/v1/tokenreviews?pretty=true' -f tokenrequest.json

{

"kind": "TokenReview",

"apiVersion": "authentication.k8s.io/v1",

"spec": {

"token": "XXXXXXXsecretXXXXXX.YYYYYYYYsecretYYYYYYYYY.ZZZZZZZZZZZZsecretZZZZZZZZZZZZZZ",

"audiences": [

"bob"

]

},

"status": {

"authenticated": true,

"user": {

"username": "system:serviceaccount:default:alice",

"uid": "ffc54e8f-c23b-4a5c-920b-fc729796295d",

"groups": [

"system:serviceaccounts",

"system:serviceaccounts:default",

"system:authenticated"

],

"extra": {

"authentication.kubernetes.io/pod-name": [

"alice-pod"

],

"authentication.kubernetes.io/pod-uid": [

"75302c6d-90d6-4299-88e3-3ded4393471a"

]

}

},

"audiences": [

"bob"

]

}

}

What happens if we try to submit the token to the Kubernetes API server with the wrong scope (audience)?

/apps # jq '.spec.audiences[0] = "anotherscope" | .' tokenrequest.json > wrongscope.json

/apps # kubectl create --raw '/apis/authentication.k8s.io/v1/tokenreviews?pretty=true' -f wrongscope.json

{

"kind": "TokenReview",

"apiVersion": "authentication.k8s.io/v1",

"spec": {

"token": "XXXXXXXsecretXXXXXX.YYYYYYYYsecretYYYYYYYYY.ZZZZZZZZZZZZsecretZZZZZZZZZZZZZZ",

"audiences": [

"anotherscope"

]

},

"status": {

"user": {},

"error": "[invalid bearer token, token audiences [\"bob\"] is invalid for the target audiences [\"anotherscope\"], unknown]"

}

}

You can see that the API server rejected the token because the scope was wrong.

What's in the JWT token?

If we decode this JWT, we find:

{

"aud": [

"bob"

],

"exp": 1700115257,

"iat": 1700108057,

"iss": "https://oidc.eks.us-west-2.amazonaws.com/id/XXXXXXXXXXXXXXXXXXX",

"kubernetes.io": {

"namespace": "default",

"pod": {

"name": "alice-pod",

"uid": "75302c6d-90d6-4299-88e3-3ded4393471a"

},

"serviceaccount": {

"name": "alice",

"uid": "ffc54e8f-c23b-4a5c-920b-fc729796295d"

}

},

"nbf": 1700108057,

"sub": "system:serviceaccount:default:alice"

}

Note that the JWT is explicit about the serviceaccount being alice, whereas the Token Review API requires

us to parse that out of .status.user.username ("system:serviceaccount:default:alice"). That is kind of annoying. But both clearly contain the pod name.

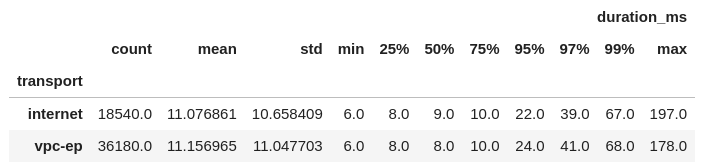

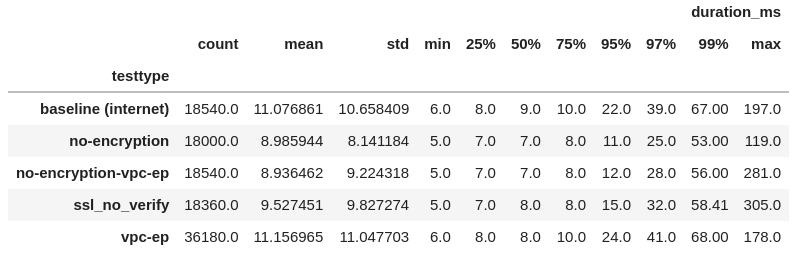

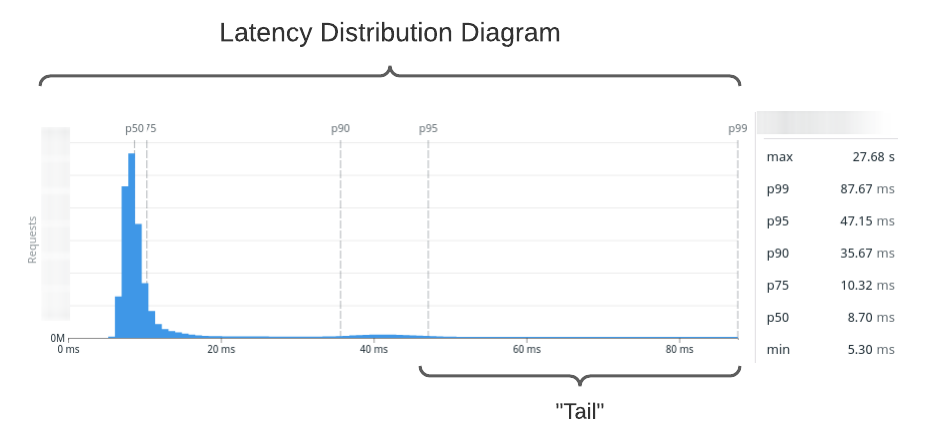

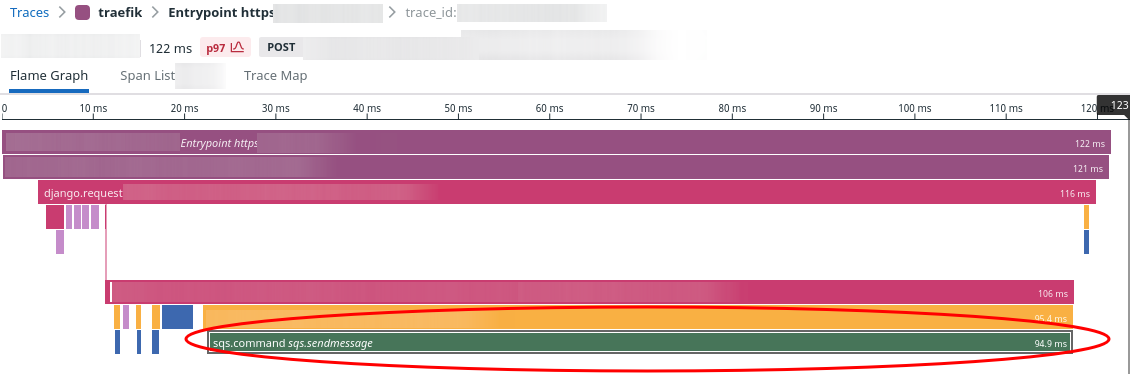

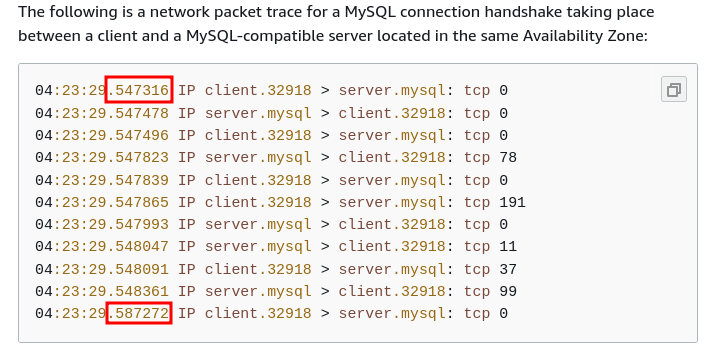

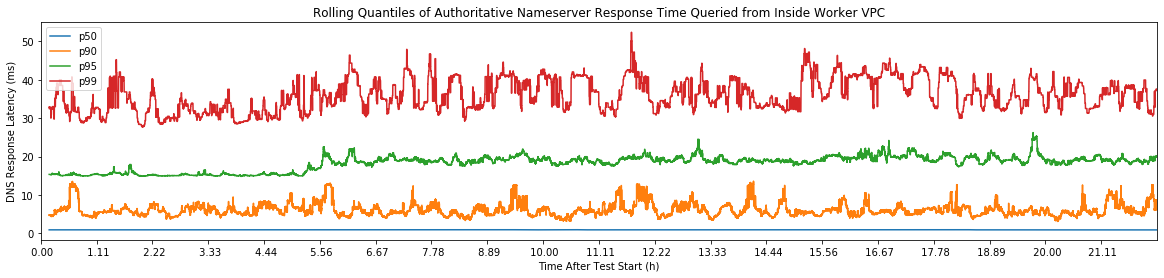

How fast is the Token Review API?

Let's check our token against the API Server 10 times and see how long it takes -- can we put this API on the hot path?

/apps # for i in $(seq 10); do kubectl create --raw '/apis/authentication.k8s.io/v1/tokenreviews?pretty=true' -f tokenrequest.json -v10 2>&1 | grep ServerProcessing; done

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 5 ms Duration 9 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 4 ms ServerProcessing 2 ms Duration 7 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 6 ms ServerProcessing 1 ms Duration 8 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 4 ms ServerProcessing 4 ms Duration 9 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 1 ms Duration 5 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 1 ms Duration 6 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 1 ms Duration 5 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 1 ms Duration 5 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 3 ms ServerProcessing 1 ms Duration 5 ms

HTTP Statistics: DNSLookup 0 ms Dial 0 ms TLSHandshake 4 ms ServerProcessing 5 ms Duration 10 ms

So typically 1-5ms if you keep a connection to the API server open (and don't have to do the TLS handshake). Not bad!

In our use case, we are only using this to bridge to our main authentication system, so we don't need to do this on the hot path. But it's fast enough we could!

Upgrading Our Interservice Authentication

When this API went GA, I jumped on the opportunity to upgrade our interservice authentication.

Implementing this in our application gave us great benefits. We were able to

- greatly simplify our code and logical flow,

- ditch our wacky hashing scheme,

- remove permissions from the god service that could read all service account token secrets,

- and increased security by scoping tokens, which removed an entire class of potential attacks.

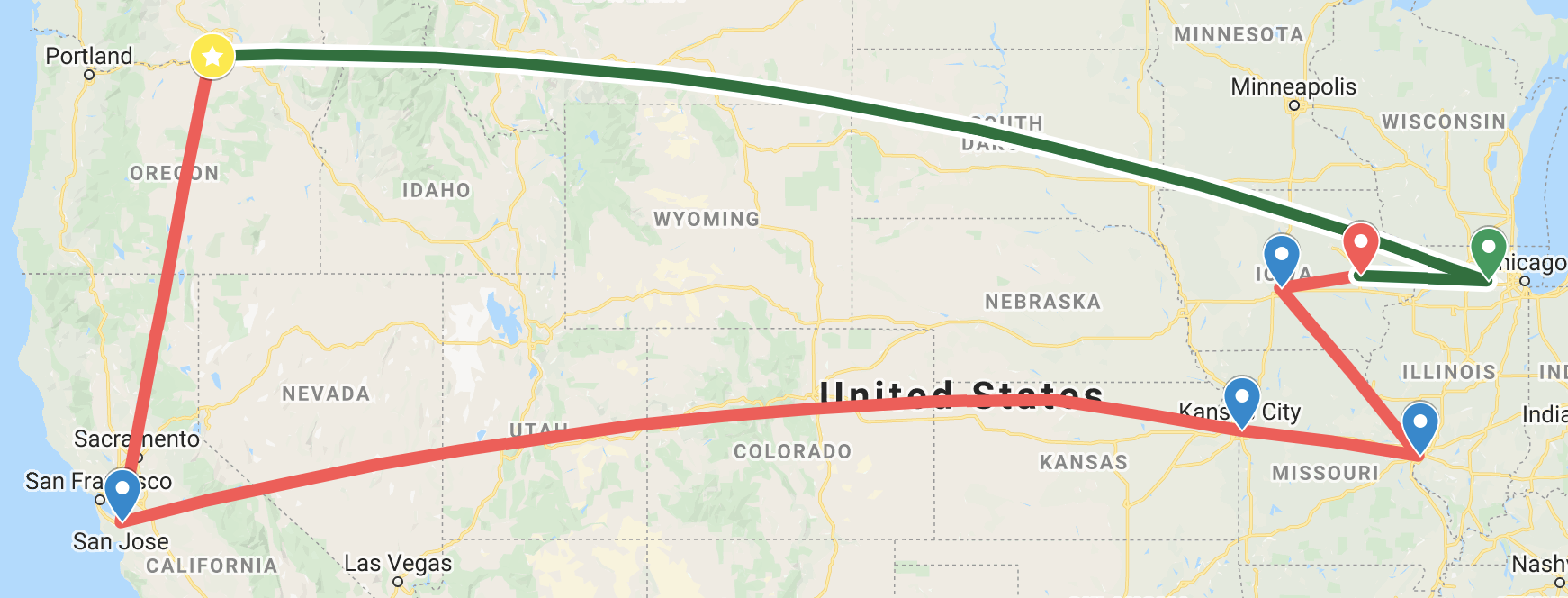

In addition to moving to Projected Service Account Tokens, we also added checking that the caller IP address matches the IP address of the pod that the token belongs to. This has the added benefit of preventing outside callers from attempting internal authentication at all.

ECS / Fargate Equivalent?

Kubernetes is notorious for having a fast, grueling upgrade cycle. So I am always keeping my ear to the ground to see how the alternatives are doing.

Two container orchestrators I have high hopes for are AWS Elastic Container Service and Fargate. The issue is I have not found a way to implement fine-grain per-container identity proofs in these systems. If you know of a way, please let me know!

Footnotes

-

Due to our application requirements, we had a central authentication service anyway, so this was not a huge deal. It was already under stricter security controls than the rest of the applications due to the sensitive nature of its data (eg, password hashes), so we felt comfortable enriching its cluster permissions. ↩

-f21ebb7a387e4abe81a9b92aba76a8ed.png)

-5111147b6c1dc040d8ef97a2366a70df.png)

-7cd5d20668ae8d5a47e657200d89ab0f.png)

.

.